Alan Turing, a name synonymous with the birth of computer science, left an indelible mark on the 20th century. His conceptualization of the Turing Machine, a theoretical computing device, laid the groundwork for modern computers and the digital age. This article explores the life, work, and lasting impact of this mathematical genius, focusing particularly on the revolutionary Turing Machine.

Turing’s Early Life and Intellectual Development

Born in London in 1912, Alan Turing showed a remarkable aptitude for mathematics and science from a young age. He studied mathematics at King’s College, Cambridge, where he developed an interest in logic and computability. During this period he began to grapple with fundamental questions about what can and cannot be computed, questions that would eventually lead to his groundbreaking invention.

The Genesis of the Turing Machine

In 1936, Turing published his seminal paper “On Computable Numbers, with an Application to the Entscheidungsproblem.” In it, he introduced the concept now known as the Turing Machine: a theoretical device that, Turing argued, can carry out any computation that can be described by an algorithm. He used this model to show that Hilbert’s Entscheidungsproblem has no general solution. A Turing Machine consists of an unbounded tape divided into cells, a read/write head that moves along the tape one cell at a time, a finite set of internal states, and a table of rules that dictates the machine’s behavior. Despite this simplicity, Turing showed that a single universal machine can simulate any other Turing Machine when it is given a description of that machine on its tape.
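The components listed above are usually written down formally as a tuple. The version below follows one common modern textbook convention rather than Turing’s own 1936 notation, and texts differ on details (for example, some add a separate input alphabet or distinct accept and reject states):

$$
M = (Q,\ \Gamma,\ \sqcup,\ \delta,\ q_0,\ q_{\mathrm{halt}})
$$

$$
\delta : (Q \setminus \{q_{\mathrm{halt}}\}) \times \Gamma \to Q \times \Gamma \times \{L, R\}
$$

Here $Q$ is the finite set of states, $\Gamma$ is the finite tape alphabet containing the blank symbol $\sqcup$, $q_0 \in Q$ is the start state, and $q_{\mathrm{halt}} \in Q$ is the halting state. If the machine is in state $q$ reading symbol $a$ and $\delta(q, a) = (q', b, D)$, it writes $b$ into the current cell, moves the head one cell in direction $D$, and enters state $q'$.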

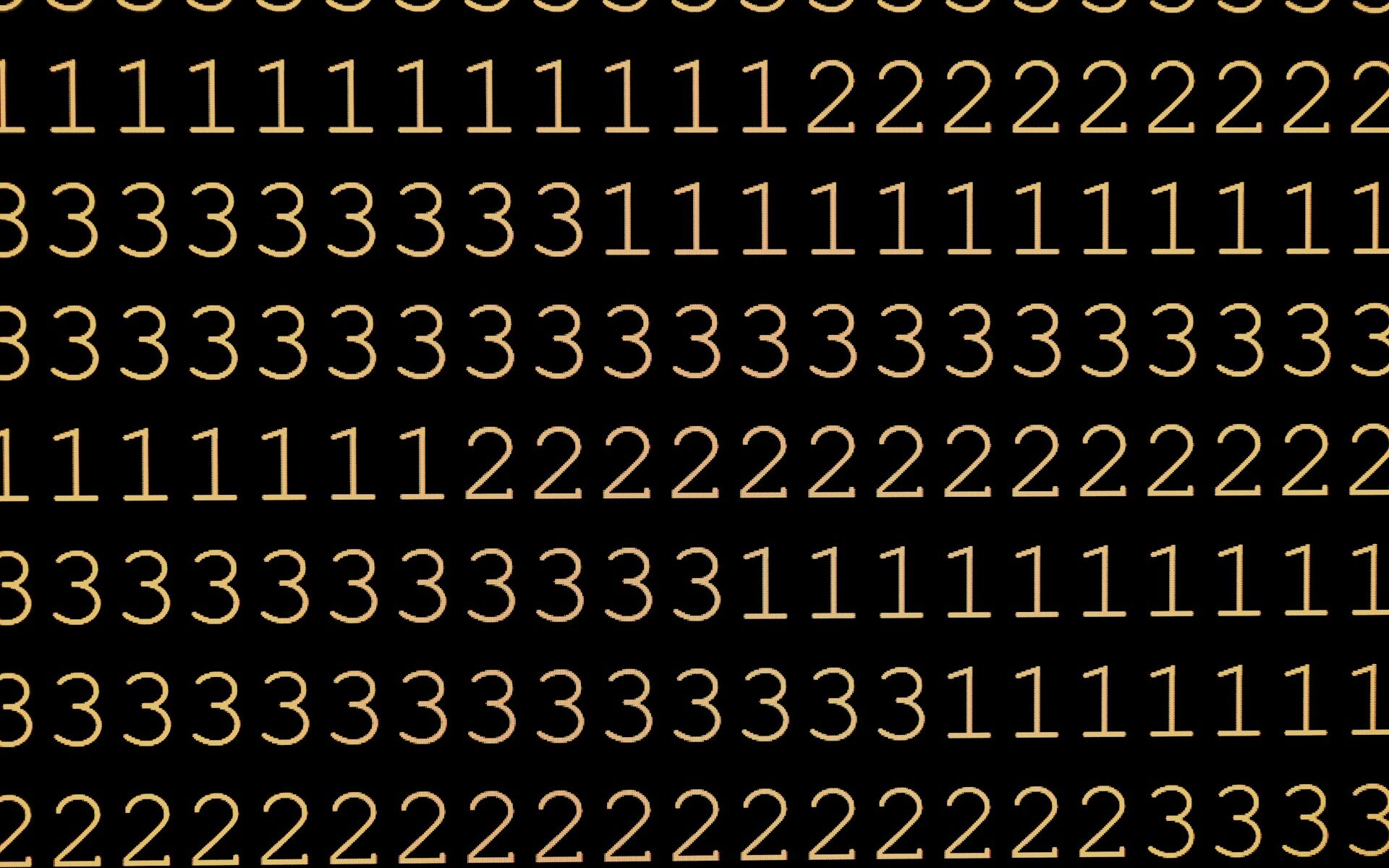

How the Turing Machine Works

The Turing Machine operates by reading symbols from the tape, writing symbols onto the tape, and moving the read/write head one cell to the left or right. At each step, its behavior is determined by two things: its current state and the symbol under the head. Based on these, the machine writes a symbol (possibly the same one) into the current cell, moves the head, and transitions to a new state. By repeating these steps, the Turing Machine can carry out arbitrarily complex computations. The beauty of the Turing Machine lies in its reduction of computation to a set of simple, mechanical operations, a conceptual breakthrough that paved the way for the development of actual computers.
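To make the read, write, move cycle concrete, here is a minimal Python sketch of a one-tape Turing Machine simulator. The rule table `INCREMENT` (a machine that adds one to a binary number) and the helper `run` are hypothetical illustrations, not taken from Turing’s paper. The sketch assumes the head starts on the leftmost input symbol, approximates the unbounded tape with a dictionary in which unvisited cells read as blank, and uses a step limit as a guard against machines that never halt.

```python
from collections import defaultdict

BLANK = "_"

# Hypothetical example machine: increments a binary number.
# Each rule maps (current state, symbol read) -> (next state, symbol to write, move),
# where move is -1 for one cell left and +1 for one cell right.
INCREMENT = {
    ("scan", "0"): ("scan", "0", +1),       # walk right over the digits
    ("scan", "1"): ("scan", "1", +1),
    ("scan", BLANK): ("carry", BLANK, -1),  # past the last digit: turn back
    ("carry", "1"): ("carry", "0", -1),     # 1 + carry = 0, keep carrying left
    ("carry", "0"): ("halt", "1", -1),      # 0 + carry = 1, done
    ("carry", BLANK): ("halt", "1", -1),    # ran off the left end: new leading 1
}


def run(rules, tape_input, start="scan", halt="halt", max_steps=10_000):
    """Simulate a one-tape Turing Machine and return the non-blank tape contents."""
    # Sparse tape: any cell never written reads as BLANK, approximating an unbounded tape.
    tape = defaultdict(lambda: BLANK, enumerate(tape_input))
    head, state, steps = 0, start, 0
    while state != halt:
        if steps >= max_steps:
            raise RuntimeError("step limit reached; the machine may never halt")
        symbol = tape[head]                          # read the current cell
        state, write, move = rules[(state, symbol)]  # look up the rule for (state, symbol)
        tape[head] = write                           # write a symbol
        head += move                                 # move one cell left or right
        steps += 1
    # Collect every visited cell, then drop the blanks at either end.
    cells = [tape[i] for i in range(min(tape), max(tape) + 1)]
    return "".join(cells).strip(BLANK)


print(run(INCREMENT, "1011"))  # 11 + 1 = 12, prints 1100
```

Running a different machine only requires a different rule table; the `run` loop itself stays the same. In this sketch, a missing rule for some (state, symbol) pair simply raises a `KeyError`.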

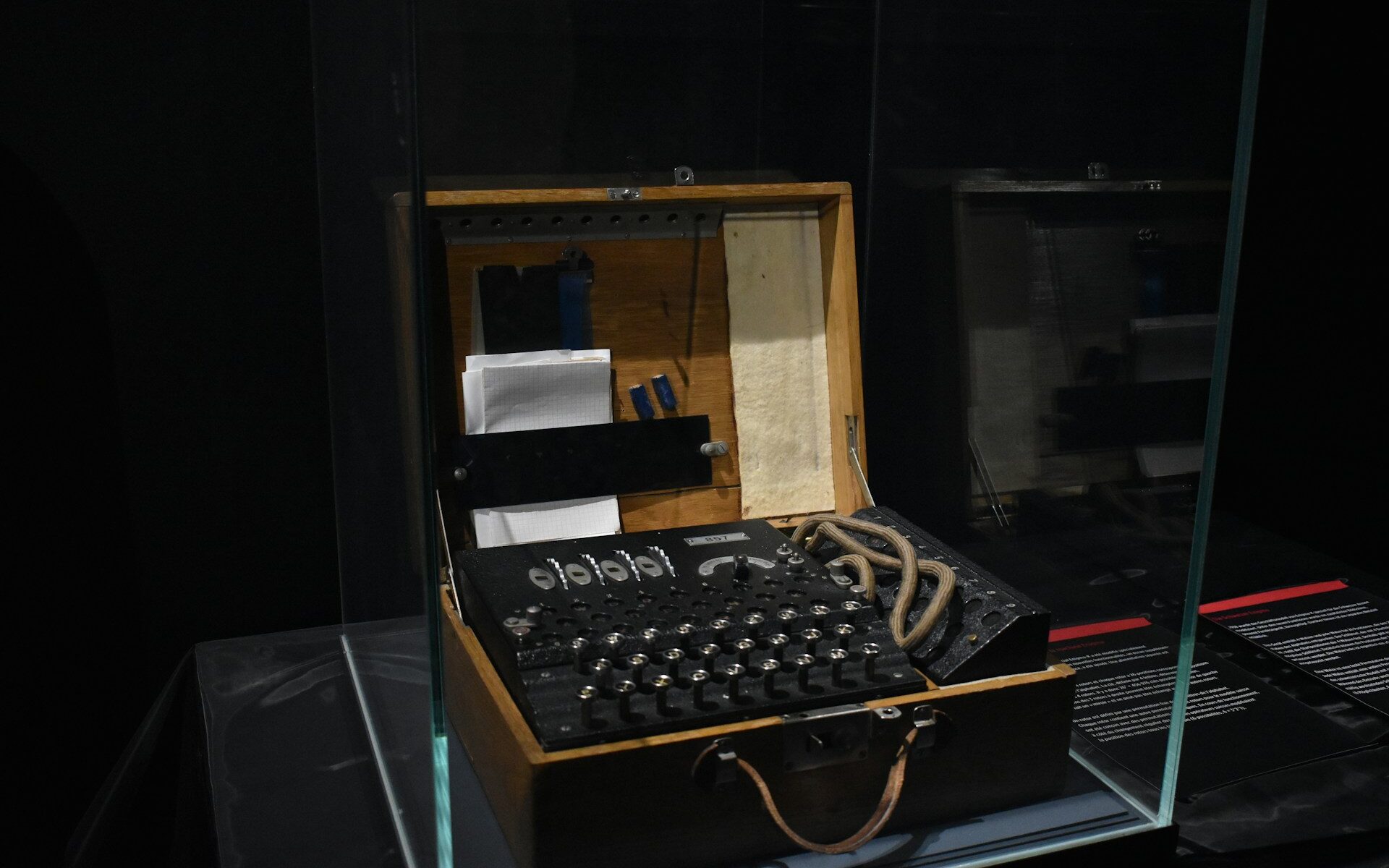

Turing’s Codebreaking Work at Bletchley Park

During World War II, Turing played a pivotal role in the Allied war effort as a codebreaker at Bletchley Park. He was instrumental in breaking the Enigma ciphers used by the German military to encrypt their communications, work that is widely credited with helping to shorten the war. Building on earlier work by Polish cryptologists, he designed the Bombe, an electromechanical machine that searched for the Enigma settings in use on a given day. The Bombe was not a general-purpose computer, but it showed how machines could automate large-scale logical search, a practical counterpart to his theoretical work on computation.

The Turing Test and Artificial Intelligence

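After the war, Turing continued to explore the possibilities of computation and artificial intelligence. He is also known for proposing the Turing Test, introduced in his 1950 paper “Computing Machinery and Intelligence” as the “imitation game.” The test asks whether a machine can exhibit intelligent behavior indistinguishable from that of a human: a human interrogator holds text conversations with a hidden human and a hidden machine, and the machine passes if the interrogator cannot reliably tell which is which. The Turing Test has been a major influence on the field of artificial intelligence, and it remains a topic of debate and research today.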

Turing’s Legacy and Lasting Impact

Alan Turing’s contributions to mathematics, computer science, and artificial intelligence remain foundational. The Turing Machine is still a cornerstone of computer science, and his work on codebreaking and artificial intelligence has had a profound impact on society. Despite being prosecuted in 1952 for homosexuality, then a criminal offence in Britain, and dying in 1954 at the age of 41, Turing left a legacy that continues to inspire scientists, engineers, and mathematicians around the world. His visionary ideas shaped the digital age and continue to drive innovation in computer science.